An LLM (Large Language Model) is software that has been trained to understand and generate text in a way similar to how a human would.

It works like a virtual assistant that can understand natural language and respond coherently. OpenAI's now-famous ChatGPT and Google's Bard are both LLMs.

The magic of an LLM lies in its training. This process exposes the model to enormous amounts of text from books, articles, websites, and much more. The LLM learns the rules of language, the relationships between words, and even nuances of meaning.

A fundamental aspect of an LLM is its ability to understand context. It does not just recognize individual words; it analyzes the whole sentence to give its answer a coherent meaning. This ability is crucial for producing relevant and understandable responses.

An LLM can write articles, answer questions, or even tell stories. Using the information it learned during training, the model creates sequences of words that make sense within the context.
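To make the idea of "creating sequences of words from what was learned" more concrete, here is a deliberately tiny sketch. It is not how a real LLM works internally: it only counts which word follows which in a made-up corpus and then always picks the most frequent follower. The corpus and the function names `train_bigrams` and `generate` are assumptions invented for this illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, start, length):
    """Build a word sequence by always choosing the most frequent follower."""
    sequence = [start]
    for _ in range(length - 1):
        followers = counts.get(sequence[-1])
        if not followers:
            break  # the last word was never seen with a follower
        sequence.append(followers.most_common(1)[0][0])
    return sequence

# Toy "training data" (hypothetical, only for illustration)
corpus = "the cat sat on the mat the cat sat on the sofa"
counts = train_bigrams(corpus)
generated = generate(counts, "the", 4)
print(" ".join(generated))
```

A real model replaces these raw counts with billions of learned parameters and looks at far more than one previous word, but the basic loop of predicting the next piece of text from what came before is the same.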

To fully understand how an LLM recognizes words, it is essential to know how the training process works.

Training and tokenization

During training, the model is exposed to a vast amount of text from books, articles, websites, and more. This allows it to learn the relationships between words, the meaning of sentences, and grammatical structures. Once loaded, the text is split into “tokens”. A token can be a word, part of a word, a punctuation mark, or even a single letter. This split lets the model analyze the text in small units, which makes processing the information more efficient.
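The splitting step can be sketched in a few lines. This is a simplified illustration that assumes tokens are whole words and single punctuation marks; production LLMs typically use sub-word tokenizers, which are not shown here. The function names `tokenize` and `build_vocab` are hypothetical.

```python
import re

def tokenize(text):
    """Split text into word tokens and single punctuation-mark tokens."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(tokens):
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

text = "The cat sat. The cat slept!"
tokens = tokenize(text)
vocab = build_vocab(tokens)
ids = [vocab[token] for token in tokens]

print(tokens)  # ['The', 'cat', 'sat', '.', 'The', 'cat', 'slept', '!']
print(ids)     # [0, 1, 2, 3, 0, 1, 4, 5]
```

The model never sees the letters themselves, only the sequence of integer IDs, which is why repeated tokens such as “cat” map to the same number.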

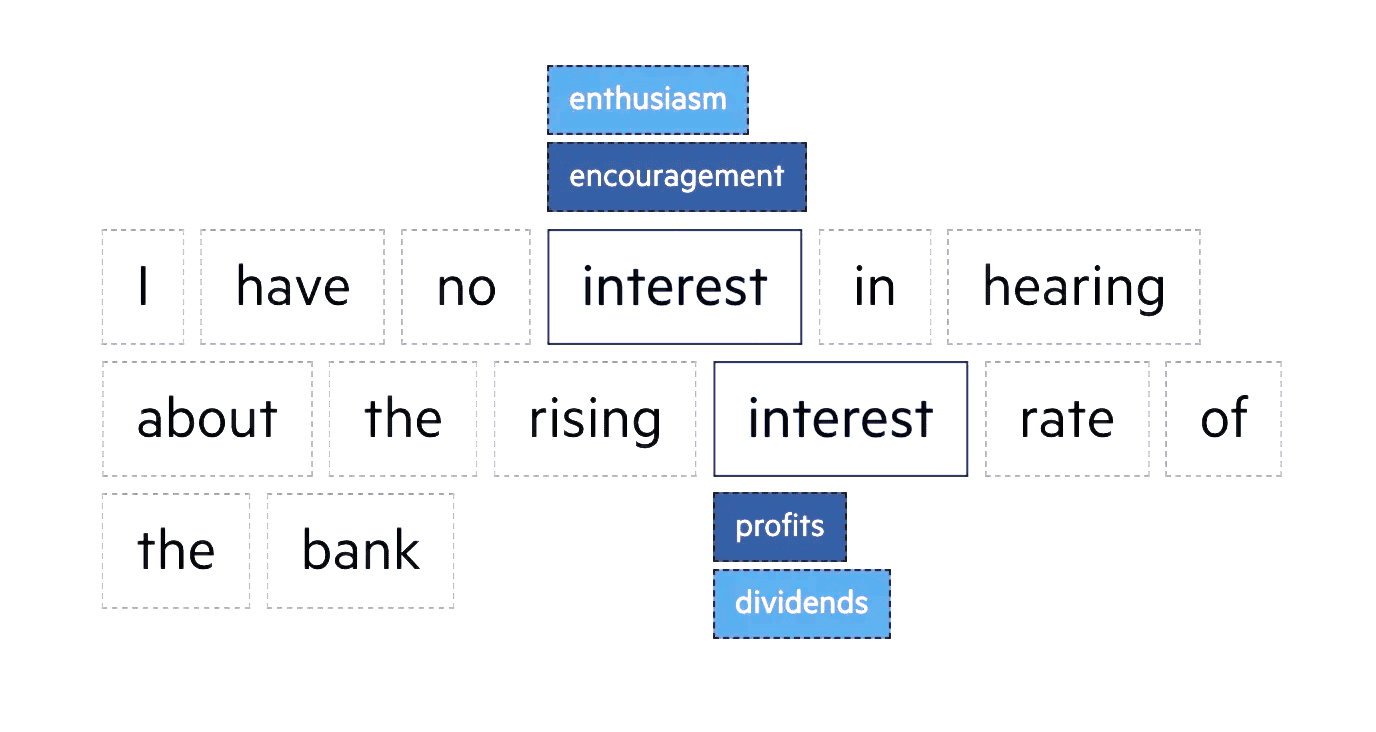

Context analysis

Understanding context is the key for an LLM. It does not just recognize single words; it analyzes the whole context in which they appear. To do so, it considers not only the word in question but also the words around it. This allows it to make coherent sense of the text and generate relevant responses. For example, if a sentence contains the word “pressure”, the model will use the context to determine whether it refers to physical, atmospheric, or psychological pressure. This ability to interpret is fundamental for avoiding ambiguity and giving precise answers.

Vector representation

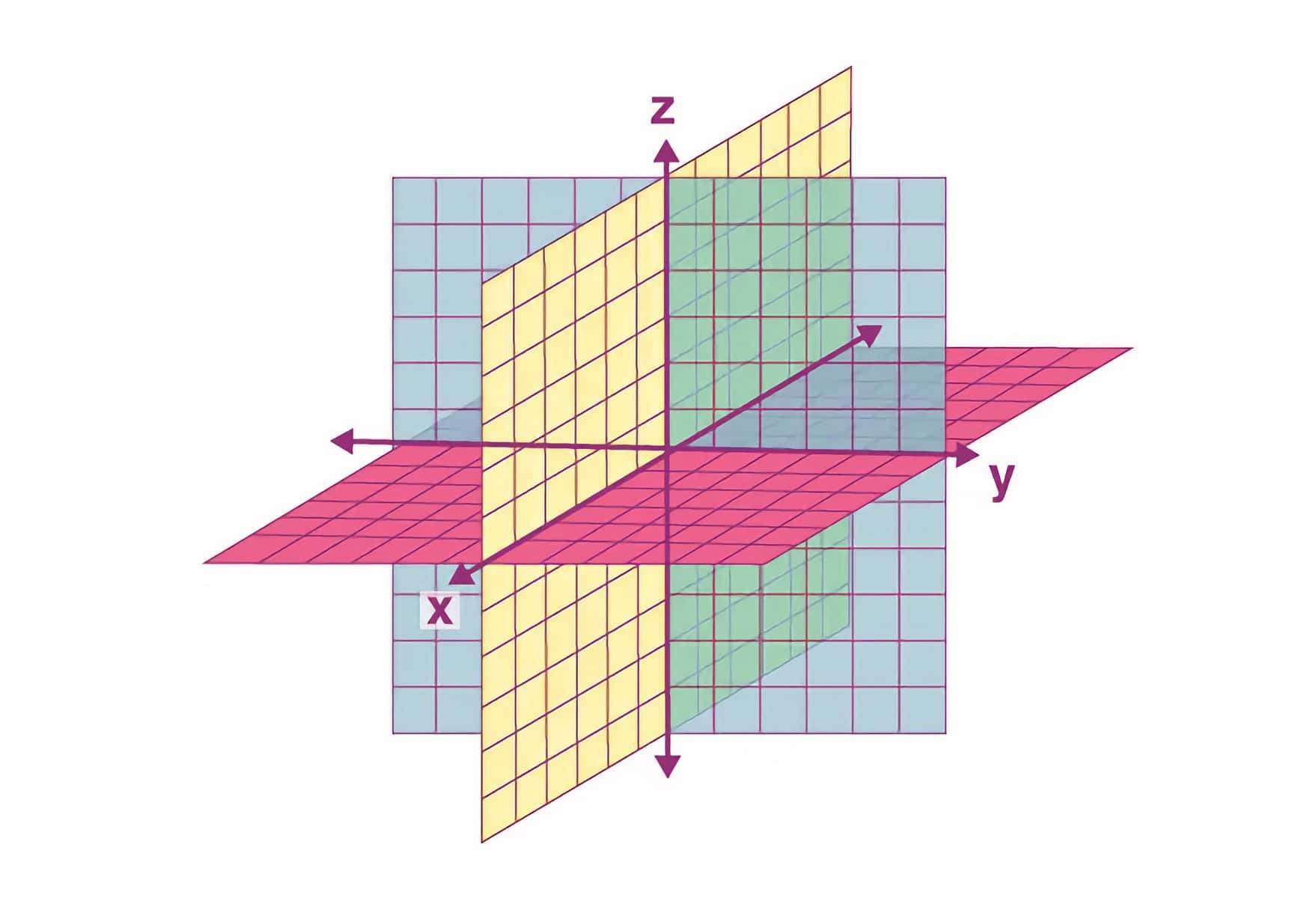

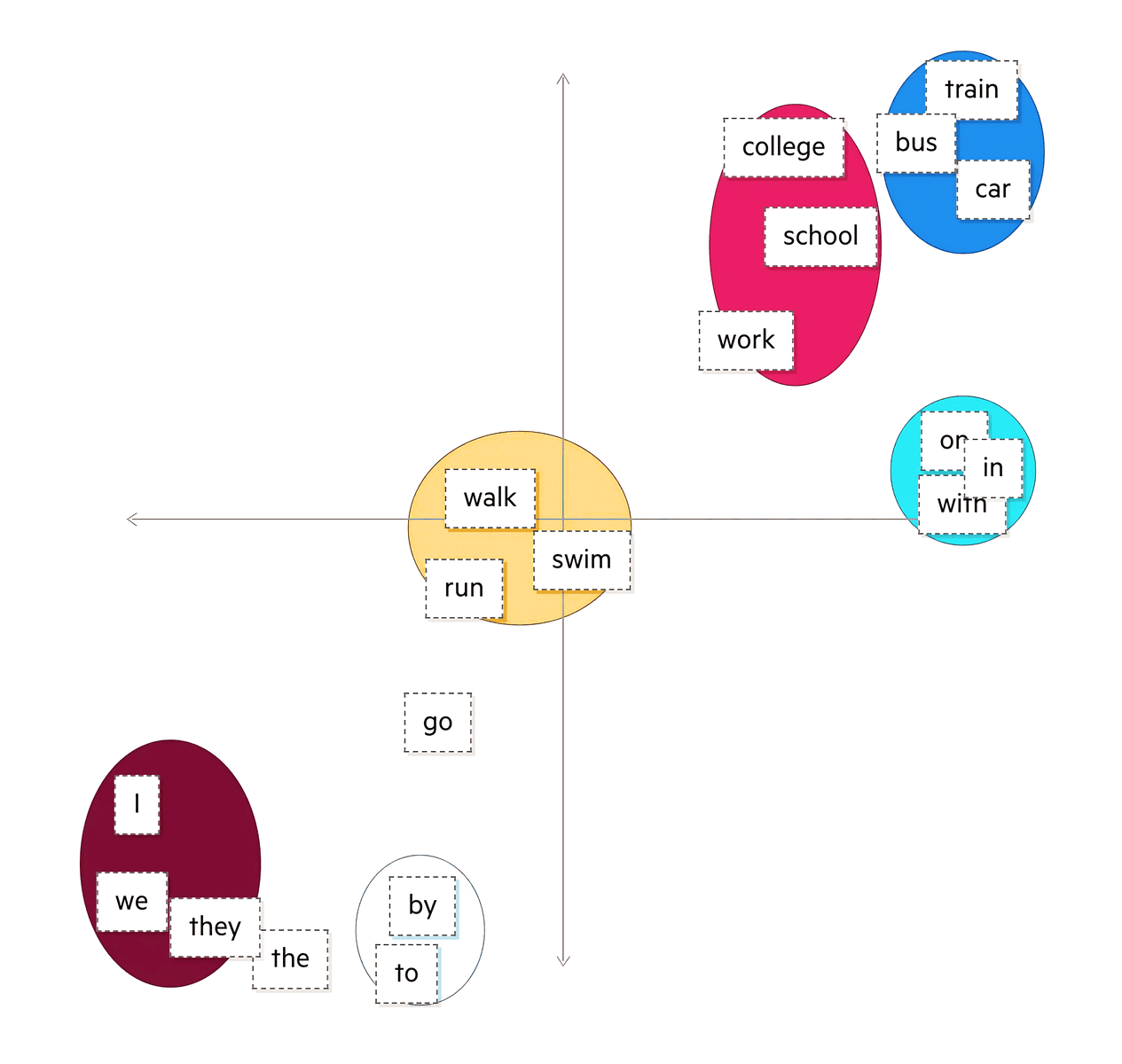

To carry out this context analysis, an LLM uses a technique called “vector representation”. This means that each word is represented as a numeric vector in a multidimensional space. These vectors capture the semantic relationships between words.

For example, related words such as “sky” and “earth” will have vector representations that sit close together in that space, because they are related concepts. This numeric representation of words allows the model to handle meaning mathematically.
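One common way to measure how “close” two word vectors are is cosine similarity, sketched below. The three-dimensional vectors are invented for illustration only (real word vectors have hundreds or thousands of dimensions and are learned during training), and the word “invoice” is added here as an assumed unrelated term for contrast.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors, made up for this example
embeddings = {
    "sky": [0.9, 0.8, 0.1],
    "earth": [0.8, 0.9, 0.2],
    "invoice": [0.1, 0.0, 0.9],
}

sky_earth = cosine_similarity(embeddings["sky"], embeddings["earth"])
sky_invoice = cosine_similarity(embeddings["sky"], embeddings["invoice"])

print(f"sky vs earth:   {sky_earth:.2f}")
print(f"sky vs invoice: {sky_invoice:.2f}")
```

With these made-up numbers, the related pair scores much higher than the unrelated pair, which is the property the text describes.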

Humans represent words with letters: C-A-T spells “cat”. Language models represent words with numbers, known as word vectors. Here is one way to represent “cat” as a vector:

[0.0074, 0.0030, -0.0105, 0.0742, 0.0765, -0.0011, 0.0265, 0.0106, 0.0191, 0.0038, -0.0468, -0.0212, 0.0091, 0.0030, -0.0563, -0.0396, -0.0998, -0.0796, …, 0.0002]

Words are complex, and language models store each specific word in a “word space”, a space with more dimensions than the human brain can picture. Imagine a three-dimensional space, to get a sense of how many different points exist within it. Now add a fourth, fifth, and sixth dimension.

Semantic and syntactic considerations

Besides semantics, an LLM takes syntax into account. This means that it evaluates not only the meaning of words but also how they are arranged within the sentence. This allows it to generate text that is grammatically correct and coherent.

The importance of attention

Another crucial element is the use of “attention” mechanisms. These allow the model to give more weight to some parts of the text than to others, depending on the context. For example, in a complex question, an LLM will pay more attention to the most relevant parts in order to generate an accurate answer.
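The “more weight to some parts than others” idea can be sketched with scaled dot-product attention, the form used in Transformer models. The article does not name a specific variant, so treat this choice, the function names, and the tiny two-dimensional vectors as assumptions made for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Weight each value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dims = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dims)]
    return weights, output

# Three tokens, each with a hypothetical key and value vector
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [0.0, 2.0]  # most similar to the second token's key

weights, output = attention(query, keys, values)
print([round(w, 2) for w in weights])
print([round(o, 2) for o in output])
```

The second token receives the largest weight because its key points in the same direction as the query, so its value dominates the output.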

Limits and challenges

LLMs can sometimes generate answers that are unexpected or not entirely accurate. This can stem from a superficial understanding or from ambiguous context. It is important to remember that, for all their ability, LLMs do not have human intuition, at least for now.

LLMs are a new technology and we are still at the dawn of their development. At the start of any new technology, people tend to apply it to contexts that already exist, but over time entirely new uses are found that have no link to the past. Now let's look at four of the countless ways LLMs can be used.

Making impossible problems possible

LLMs can take something that humans simply cannot do and make it feasible, for example sequencing DNA. LLMs can translate these sequences into language problems, which then become solvable. The same applies to many other things that we simply cannot grasp, partly because an LLM can draw on millions of data points to work out a tailored answer rather than a prepackaged one, something that even the most intelligent human cannot do.

Making easy problems less frustrating

Language models can remove the friction in matching supply and demand, as in the second-hand market, and in other categories that require considerable human input and exchange of information, such as service marketplaces where you search for an electrician.

Vertical AI

This follows the SaaS playbook: start by identifying a pain point to solve for a specific group of users in a specific market; solve that pain point, then expand the product range to cover other problems; eventually become the main reference point and one-stop shop through which that person runs their day-to-day; add payments to control the flow of money and take a commission. Vertical AI is similar: become incredibly focused on serving a specific customer in a specific industry, and use that specialization to offer a product that is 10 times better. In AI terms, this means training a model on domain-specific data that creates a feedback loop leading to product improvement. Recently, AI applications have been presented that offer psychological support, or that take a photo and a description and return a diagnosis of your medical problem, reportedly with greater accuracy than doctors (again because the AI draws on millions of data points that even the best doctor cannot access).

AI copilot

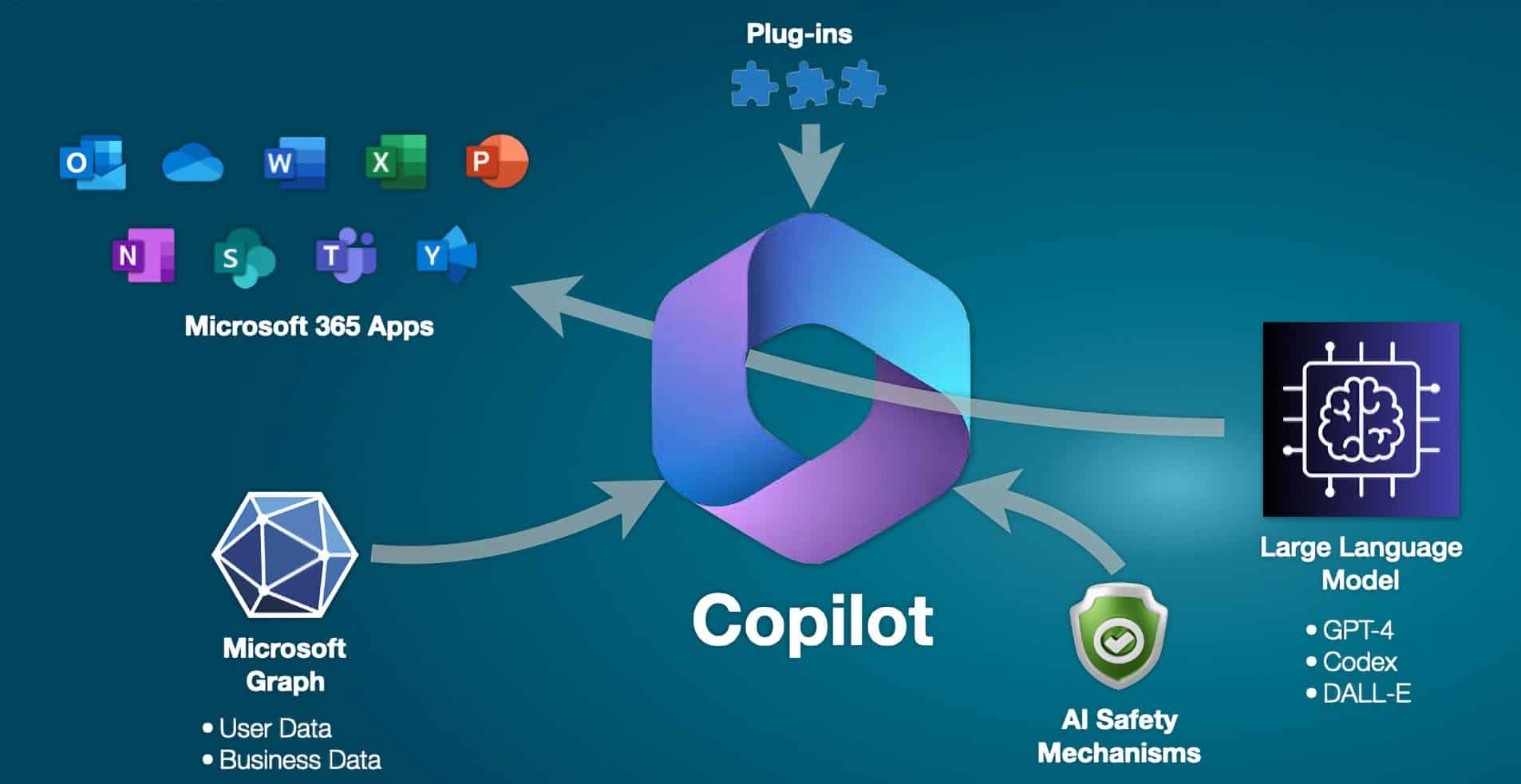

Everyone will have an AI copilot that enhances their knowledge and creativity. Before AI directly replaces humans, it will augment them. GitHub Copilot, which helps software developers write code, is the first AI “copilot” product to publicly announce that it has passed $100 million in annual revenue.

A recent Harvard Business School study measured how LLMs amplify the performance of consultants. In the HBS experiment, consultants randomly assigned to have access to GPT-4 completed 12.2% more tasks on average and worked 25.1% faster. Quality improved by 40%.

Microsoft has announced that from now on Windows will be built around a copilot that helps with any task we need to do, from writing or replying to an email to creating an image for a presentation, which will be laid out automatically from the description we write. Google has also announced that Bard will soon arrive on Android phones to assist with many functions.

LLMs will soon be so present in our daily lives that they will become increasingly invisible. We will always have a “guardian angel” with us that talks to us (the next big step is for these LLMs to speak like real people), advises us, and helps us with practically anything. This opens up another discussion about whether we will become lazier and more dependent on technology, but this is not the place to explore that question, important as it is.

At Ex Machina we are always looking for new approaches to use in our projects, building custom solutions for companies and public bodies. If you want to find out more about our AI solutions, explore our site > https://exmachina.ch